The SIGGRAPH Asia 2020 Virtual Conference saw an insightful presentation by David Morin, head of Epic Games’ L.A. Lab, on virtual production, the emerging technology that has taken the world of motion pictures by storm.

Widely touted as a game changer, virtual production is generating growing buzz at Asia’s largest computer graphics and interactive techniques event. Morin is also Epic Games’ industry manager for film and TV, where he spearheads the Unreal Engine team’s efforts to further the engine’s development and adoption across the film and television landscape. He delivered a comprehensive presentation explaining how the “in-camera visual effects” technique is becoming a mainstay and fundamentally changing the film and TV industry.

Diving into the genesis and history of this emerging technology, Morin alluded to a famous phrase from 10 years ago: ‘Software is eating the world.’

Putting the rise of software into context, he said, “It’s been 50 years since the invention of the microprocessor. 30 years since the rise of the internet. 10 years since the cloud, and today it’s fair to say that (far from eating it) software powers the world, and it does power also the media industry and motion picture.”

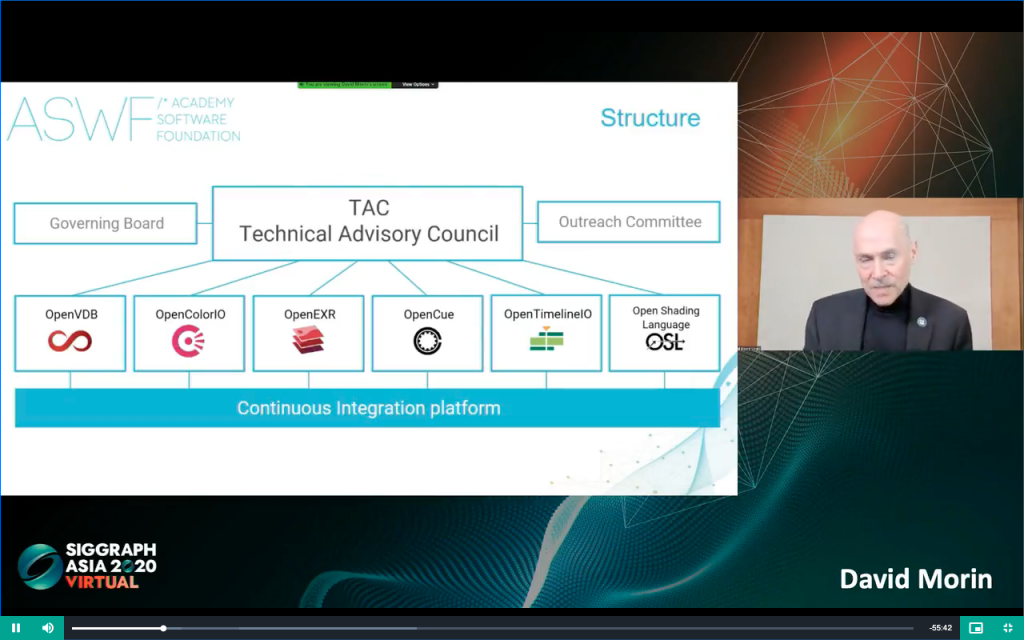

Academy Software Foundation

With software taking precedence, Morin noted that the Academy of Motion Picture Arts & Sciences partnered with the Linux Foundation two years ago to create the Academy Software Foundation, a neutral forum for developing open source software for the industry.

Enumerating the open source projects currently available, Morin noted, “We have six projects that are hosted at the Academy Software Foundation. If you work in this industry, you probably know some of them, which are bringing a lot of good efficiencies in the process of making movies: OpenEXR, OpenColorIO, OpenCue, OpenVDB, OpenTimelineIO and Open Shading Language.”

He explained that the foundation is now set up to develop all these projects on one continuous integration platform. He shared, “So each one of these projects was developed separately by the companies where they came from, and now they’re on a single platform, and that platform, which is open source, can be adopted by studios of all sizes who want to get into open source software development. Each project has its own engineers and they all regroup into the Technical Advisory Council, which is the heart of the foundation and the brain where the engineers make all the decisions about what to do with the projects.”

In-Camera Visual Effects and Real-Time Workflows Using Unreal Engine to Produce Film & Television Content

Setting the tone for the slide on the history of Unreal Engine, Morin shared, “The company was created in 1991 by Tim Sweeney, who is a software engineer himself, a conservationist and still our CEO today; the guiding light, the one who set the direction for the company. He had a vision since the beginning about building the metaverse one step at a time. And we’re all aligned with him to make that happen. The Unreal Engine itself was a set of tools that were used at Epic to do the first games that Tim developed, and that were packaged into software available to others in 2001. Real time being at the core of the source code on GitHub. There’s a very close affinity between Epic Games and the open-source movement. There have been more than 500 games built on Unreal Engine on the partnership model, and Epic itself has built 45 games in-house, including Fortnite.”

Fortnite is built on Unreal Engine 4, the same software that is actively powering film and television production. Speaking about its many applications, Morin shared, “Unreal Engine is used across many industries. Not only for films and TV, it’s used in broadcasting, live events, design and manufacturing, simulation and training, architecture and more.”

For all its versatility and power, the engine’s biggest selling point is that it is free to download. Morin explained, “Unreal Engine is free for creators. So you can download our main engine right from the website and you can start using it just like that.”

Explaining how it is used in film and television production, Morin noted, “It is used increasingly, and I’m glad to say here for the first time that we crossed a hundred and sixty film and television projects that have been done using Unreal Engine or are currently in production using Unreal Engine to come out in the next year. The use of Unreal takes many forms. It’s used for animation, live action and virtual production, which is the umbrella term that we use for a lot of different use cases: virtual sets, VR scouting, pre-visualization and all its different uses, in-camera visual effects, performance capture and final render. The engine is used in all stations of movie production.”

He noted that the adoption of this technology can be traced back to A-list filmmakers Peter Jackson, Jim Cameron and Steven Spielberg, who worked with virtual cameras before the time of Unreal. He shared, “They were using the technology that they could to try to preview their visual effects while they were shooting. This was previz and it was never final, but it was useful because you could do in pre-production, at a very low cost, all kinds of experiments that you couldn’t do later when it cost a lot of money.”

Morin said that although Avatar was a great case study in the use of virtual production, it was an expensive and complex setup. He continued, “Things started to change in 2014 when Facebook acquired Oculus and VR became a thing. Many filmmakers did great experiments with VR using Unreal Engine.”

The Academy, in its wisdom, realised that this new form of storytelling was important to recognise. The previous time it had recognised a pioneering storytelling technique, the honour went to Pixar for computer animation. After that, virtual production (VP) started coming online, with virtual sets built in Unreal Engine being used in real productions.

Morin shared, “In 2018, we saw one of the first ones with John Wick 3, where they built an entire glass house in Unreal Engine. This led to virtual scouting, because once we had a set in Unreal, we developed tools that allowed filmmakers to go in VR and explore a set with an interface that is now adapted to them, with information like lenses. They are able to zoom according to the focal length, to measure distance on their sets, interact and move things on the set, and you can do that in a multi-user session, which means you can have multiple people in their goggles on the set at the same time and, at the same time, a camera can be shooting the set.”
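The link between focal length and what a scout sees through a virtual lens can be illustrated with basic lens geometry. The sketch below is not Unreal Engine code. It assumes an ideal rectilinear (pinhole) lens and a 36 mm wide sensor, and the function names are hypothetical, chosen only for illustration.

```python
import math


def horizontal_fov_degrees(focal_length_mm: float, sensor_width_mm: float = 36.0) -> float:
    """Horizontal angle of view of an ideal pinhole lens, in degrees.

    The 36 mm default sensor width is an assumption (full-frame stills format);
    a real production camera may use a different sensor size.
    """
    if focal_length_mm <= 0 or sensor_width_mm <= 0:
        raise ValueError("focal length and sensor width must be positive")
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))


def visible_width_m(distance_m: float, focal_length_mm: float,
                    sensor_width_mm: float = 36.0) -> float:
    """Width of the set visible at a given distance from the camera.

    Similar triangles: visible_width / distance = sensor_width / focal_length.
    """
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    return distance_m * sensor_width_mm / focal_length_mm


if __name__ == "__main__":
    # Compare a few common focal lengths at 10 m from the camera.
    for focal in (18.0, 35.0, 50.0, 85.0):
        fov = horizontal_fov_degrees(focal)
        width = visible_width_m(10.0, focal)
        print(f"{focal:>5.0f} mm lens: {fov:5.1f} deg wide, {width:5.2f} m of set visible at 10 m")
```

A longer lens narrows the angle of view, so less of the set fits in frame at the same distance, which is the effect a scout previews when switching lenses in VR.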

Previsualization

Utilising the technique, productions are able to move from one convincingly detailed and photo-real environment to another without leaving the stage.

Another area of moviemaking where Unreal is being used is pre-visualization, where specialist companies and departments produce previz to experiment with designs and plan better. Citing an example, Morin shared, “Halon Entertainment recently used Unreal Engine for their Ford v Ferrari previz.”

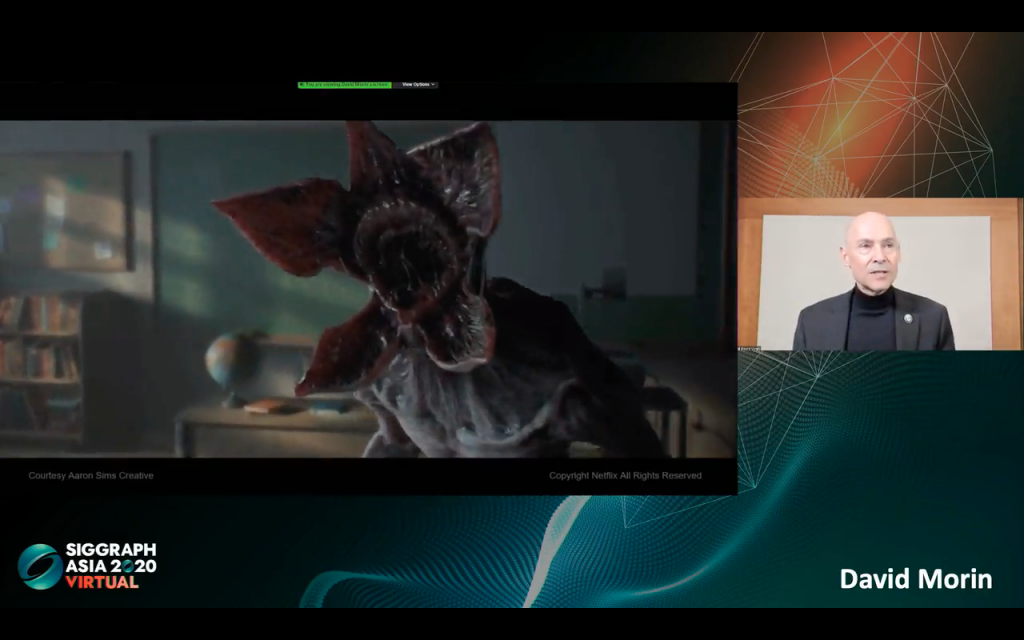

Creature Design

Aaron Sims Creative developed a test of the Demogorgon character for Netflix’s Stranger Things.

Talking about the development of the character and the role of the engine in its creation, Morin shared, “It ran in real time inside Unreal, and you can see the level of quality that can be reached now even in the production design phase, where you can go in real time with depth of field and all the rendering quality in the shaders that you find associated with renders that take hours per frame, such as details of the skin. So it’s a game changer.”

In-Camera Visual Effects

In-camera visual effects give filmmakers the flexibility of a visual effects process as well as the immediate realism of actual photography. It is now a complete workflow that involves essentially every element of virtual production, with a real-time, photo-real set in front of the camera.

DNEG VFX supervisor Paul Franklin shared, “We were at the set of Dimension, where we have been shooting a virtual production test with this high-resolution LED wall, and what this technology allows us to do is to create sets, environments and landscapes, all on the same stage. So rather than physically building a set, we create a computer-generated image of the environment and play it back on this screen, and the really clever thing is that, unlike a regular scenic backdrop or just a rear projection, this environment is a live 3D world. And so we’re creating a virtual stage, but then filming it all in camera. Virtual sets have existed for a long time in visual effects using green screen, where we have to then replace the green later on in post-production, but this adds an extra dimension to the process and makes it so much more immediate. You get all the benefits of live photography on the day, as it were, and essentially you can get everything without having to do any additional post-production.”

Franklin added that the approach also offers the flexibility of working with computer graphics and computer animation. Effects animation such as explosions, fire and lighting changes can be added, all of which would be very difficult, time-consuming and potentially even dangerous to do on a physical set. So it offers the best of both worlds.

When you build an in-camera visual effects setup, you invariably have to build your video wall. The important part takes place in the virtual world: you build a virtual version of all your tiles and then place it in your scene as a way to specify what is going to be displayed on the physical video wall.

Morin said, “We have developed features within Unreal to split the video wall in any configuration you want and in segments that can be assigned to different machines that can render different sections of the wall. The wall itself is used both as a background but also as a lighting instrument, and there’s a lot of features that have been included in the engine to achieve that. Camera view frustum is a process by which you render what the camera sees at a much higher resolution.”

You can also include a green screen on the video wall itself. Unreal offers a range of compositing features that can be used for live green-screen compositing on set, should filmmakers want a safety net.
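The idea of splitting a wall into segments that different machines render can be sketched in a few lines. This is an illustration only and does not use Unreal Engine's actual configuration format or API. The `WallSegment` type, the `split_wall` function and the node names are all hypothetical, and the wall is simplified to a single row of equal-width tile columns.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WallSegment:
    """A contiguous run of tile columns assigned to one render machine."""
    node: str
    first_column: int  # inclusive
    last_column: int   # inclusive


def split_wall(total_columns: int, nodes: list[str]) -> list[WallSegment]:
    """Divide the wall's tile columns as evenly as possible among render nodes.

    When the columns do not divide evenly, the earliest nodes each take one
    extra column, so segment widths differ by at most one.
    """
    if not nodes:
        raise ValueError("at least one render node is required")
    if total_columns < len(nodes):
        raise ValueError("need at least one column per render node")

    base, extra = divmod(total_columns, len(nodes))
    segments = []
    start = 0
    for index, node in enumerate(nodes):
        width = base + (1 if index < extra else 0)
        segments.append(WallSegment(node, start, start + width - 1))
        start += width
    return segments


if __name__ == "__main__":
    # Hypothetical wall of 10 tile columns shared by three render machines.
    for segment in split_wall(10, ["render-01", "render-02", "render-03"]):
        print(segment)
```

Contiguous segments keep each machine responsible for one unbroken region of the wall. A real setup would also need to account for wall curvature, the ceiling and the higher-resolution region covering what the camera sees, none of which is modelled here.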

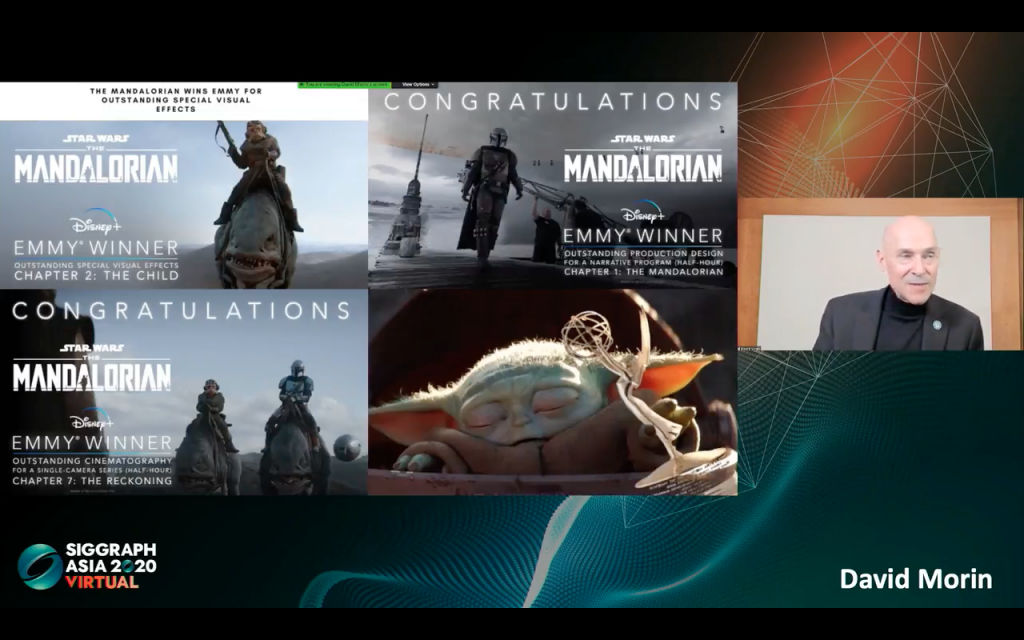

Morin said that these features were essentially built for the award-winning first season of The Mandalorian, which was produced using the in-camera visual effects workflow in an innovative way. Speaking about the way the series was shot, he shared, “The stage was 21 feet tall and 75 feet in diameter, with a juncture between the ceiling and the wall, and the motion capture cameras captured the real camera position very precisely.”

The earliest adopters that the Unreal Engine team helped, prior to the pandemic, were the teams behind The Mandalorian and Westworld. The success of those projects led many studios across the world to give this technology a try.

Weta Digital’s Meerkat

He shared that the Meerkat short film, recently created by visual effects powerhouse Weta Digital, showcases Unreal Engine’s new production-ready Hair and Fur system.

Speaking about the tools available in the engine, he shared, “There’s the fur, there’s a new tool to make clouds and skies in real time. There’s a tool for water. There’s a lot of tools for games. I highly encourage you to go see the website and download the software. Overall, your success is our success. That’s the motto at Epic Games. We develop the tools so that you are successful at doing what you’re doing and moving to the next step. We provide all kinds of tools.”

With software developments in real-time game engines, combined with hardware developments in GPUs and on-set video equipment, filmmakers are now able to capture final pixel visual effects while still on set – unlocking new levels of creative collaboration and efficiency during principal photography. These new developments allow changes to digital scenes, even those at final pixel quality, to be seen instantly on high-resolution LED walls – a substantial time saving over a traditional CG rendering workflow.